BERT for Different Tasks


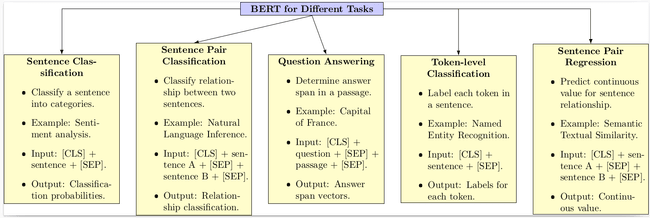

BERT is versatile and can be adapted for various NLP tasks. The original BERT paper demonstrates how to use BERT for different tasks by slightly modifying the model's architecture. Here are the primary tasks and how BERT is utilized for each:

- Sentence Classification:

  - Task: Given a single sentence, classify it into one of several categories.

  - Example: Sentiment analysis, where a sentence is classified as positive, negative, or neutral.

  - Input: [CLS] + sentence + [SEP]

  - Output: The [CLS] token's final representation is fed into a classification layer followed by softmax to produce the class probabilities.

- Sentence Pair Classification:

  - Task: Given two sentences, classify the relationship between them.

  - Example: Natural Language Inference (NLI), where a pair of sentences is classified as entailment, contradiction, or neutral.

  - Input: [CLS] + sentence A + [SEP] + sentence B + [SEP]

  - Output: The [CLS] token's final representation is fed into a classification layer followed by softmax to classify the relationship.

- Question Answering:

  - Task: Given a passage and a question, determine the answer span within the passage.

  - Example: "Question: What is the capital of France? Passage: Paris is the capital of France."

  - Input: [CLS] + question + [SEP] + passage + [SEP]

  - Output: A start score and an end score are computed for each token in the passage from its representation. The highest-scoring start and end positions determine the answer span in the passage.

- Token-level Classification:

  - Task: Assign a label to each token in a sentence.

  - Example: Named Entity Recognition (NER), where tokens in a sentence are classified as person, organization, location, etc.

  - Input: [CLS] + sentence + [SEP]

  - Output: Each token's final representation is fed into a classification layer followed by softmax to determine its label.

- Sentence Pair Regression:

  - Task: Given two sentences, predict a continuous value representing their relationship.

  - Example: Semantic Textual Similarity (STS), where the model predicts how similar two sentences are on a scale.

  - Input: [CLS] + sentence A + [SEP] + sentence B + [SEP]

  - Output: The [CLS] token's final representation is fed into a dense layer with a single output to produce a continuous value.
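The "Input" layouts listed above can be sketched in a few lines of Python. This is only an illustration: the helper name `pack_inputs` is hypothetical, whitespace splitting stands in for BERT's WordPiece tokenizer, and the segment ids (0 for the first text, 1 for the second) are a detail of BERT's input that the list above does not spell out.

```python
from typing import List, Optional, Tuple


def pack_inputs(text_a: str, text_b: Optional[str] = None) -> Tuple[List[str], List[int]]:
    """Build the [CLS]/[SEP] token layout and segment ids for one or two texts.

    Assumption: str.split() is a stand-in for BERT's WordPiece tokenizer.
    """
    tokens = ["[CLS]"] + text_a.split() + ["[SEP]"]
    segment_ids = [0] * len(tokens)  # everything up to the first [SEP] is segment 0
    if text_b is not None:
        tokens_b = text_b.split() + ["[SEP]"]
        tokens += tokens_b
        segment_ids += [1] * len(tokens_b)  # second text and its [SEP] are segment 1
    return tokens, segment_ids


# Single-sentence layout: [CLS] + sentence + [SEP]
single_tokens, single_segments = pack_inputs("the movie was great")

# Pair layout: [CLS] + sentence A + [SEP] + sentence B + [SEP]
pair_tokens, pair_segments = pack_inputs("a man is sleeping", "a person rests")

print(single_tokens, single_segments)
print(pair_tokens, pair_segments)
```

The same pair layout serves sentence pair classification, sentence pair regression, and question answering (with the question as the first text and the passage as the second).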

For all these tasks, BERT's pre-trained weights serve as initialization, and the model is fine-tuned on task-specific data. The beauty of BERT lies in its ability to adapt to a wide range of tasks with minimal architectural changes, leveraging the rich representations it learned during pre-training.
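To make "minimal architectural changes" concrete, here is a rough sketch of how small the task-specific heads are. It assumes BERT has already produced one vector per token; the 3-dimensional vectors, the weights, and the label sets are made-up toy values (real BERT-base representations have 768 dimensions, and real heads are learned during fine-tuning).

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense(vector: List[float], weight_rows: List[List[float]], biases: List[float]) -> List[float]:
    """One dense layer: each weight row produces one output score."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weight_rows, biases)]


# Toy token representations for "[CLS] Alice runs [SEP]" (hypothetical values).
hidden = [[0.2, -0.1, 0.4], [1.0, 0.3, -0.5], [-0.3, 0.8, 0.1], [0.0, 0.0, 0.1]]

# Sentence-level head: only the [CLS] vector (index 0) is used.
sent_weights = [[1.0, 0.0, 1.0], [-1.0, 0.5, 0.0]]  # 2 classes
sent_biases = [0.0, 0.0]
sentence_probs = softmax(dense(hidden[0], sent_weights, sent_biases))

# Token-level head: the same small layer is applied to every word token.
tok_weights = [[-1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]  # labels: 0 = O, 1 = PER
tok_biases = [0.0, 0.0]
token_label_ids = []
for vec in hidden[1:-1]:  # skip [CLS] and [SEP]
    probs = softmax(dense(vec, tok_weights, tok_biases))
    token_label_ids.append(probs.index(max(probs)))

print(sentence_probs)
print(token_label_ids)
```

The only difference between the two heads is which representations they read: the sentence-level head looks at [CLS] alone, while the token-level head looks at each token in turn.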

BERT for Question Answering Task:

Training Phase:

- Input Preparation:

  - Given a passage and an associated question, the input is formed as: [CLS] + question + [SEP] + passage + [SEP].

  - The ground truth answer is provided as a span in the passage.

- BERT's Output:

  - After passing the input through BERT, every token in the passage gets a representation.

  - Two separate dense layers are applied to each token's representation: one produces a score for the token being the start of the answer span, and the other a score for it being the end.

- Loss Calculation:

  - A softmax over the tokens turns the start scores and the end scores into two probability distributions, which are compared to the ground truth start and end positions.

  - Cross-entropy loss is calculated for both the start and end positions, and the two are combined to form the total loss for the example.
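The loss calculation above can be sketched as follows. The scores are hypothetical, and combining the two losses by averaging is an assumption made here for illustration (summing them is an equally valid reading of "combined").

```python
import math
from typing import List


def cross_entropy(logits: List[float], target: int) -> float:
    """Negative log-probability of the target index under softmax(logits)."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
    return log_sum_exp - logits[target]


def span_loss(start_logits: List[float], end_logits: List[float],
              start_pos: int, end_pos: int) -> float:
    """Average of the start and end cross-entropy losses (averaging is an assumption)."""
    return 0.5 * (cross_entropy(start_logits, start_pos)
                  + cross_entropy(end_logits, end_pos))


# Hypothetical scores for a 5-token input; the true answer span is tokens 2..3.
start_logits = [0.1, 0.2, 3.0, 0.3, 0.1]
end_logits = [0.0, 0.1, 0.5, 2.5, 0.2]
loss = span_loss(start_logits, end_logits, start_pos=2, end_pos=3)
print(f"span loss: {loss:.4f}")
```

The loss shrinks as the model puts more probability on the correct start and end tokens, which is what fine-tuning pushes it toward.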

Example for Training:

- Question: "What is the capital of France?"

- Passage: "Paris is the capital of France. It is known for the Eiffel Tower."

- Ground Truth Answer: "Paris"

The input to BERT would be: [CLS] What is the capital of France? [SEP] Paris is the capital of France. It is known for the Eiffel Tower. [SEP]

The ground truth start position for the answer is the token "Paris", and the end position is the same token "Paris". The model will aim to predict these positions correctly during training.
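As a rough illustration of what "start position" and "end position" mean in practice, the sketch below packs this example and looks up the token indices of the answer. The helper `find_answer_span` is hypothetical, and whitespace splitting is a simplification: real BERT uses WordPiece tokens, so the actual indices would differ, and an answer glued to punctuation (such as "France." in the passage) would not match here.

```python
from typing import List, Tuple


def find_answer_span(question: str, passage: str, answer: str) -> Tuple[List[str], int, int]:
    """Return the packed tokens plus the start/end token indices of the answer."""
    question_tokens = question.split()
    passage_tokens = passage.split()
    answer_tokens = answer.split()
    tokens = ["[CLS]"] + question_tokens + ["[SEP]"] + passage_tokens + ["[SEP]"]
    offset = len(question_tokens) + 2  # index of the first passage token
    for i in range(len(passage_tokens) - len(answer_tokens) + 1):
        if passage_tokens[i:i + len(answer_tokens)] == answer_tokens:
            return tokens, offset + i, offset + i + len(answer_tokens) - 1
    raise ValueError("answer not found in passage")


tokens, start, end = find_answer_span(
    "What is the capital of France?",
    "Paris is the capital of France. It is known for the Eiffel Tower.",
    "Paris",
)
print(start, end, tokens[start:end + 1])  # 8 8 ['Paris']
```

Because the answer is a single token, the start and end indices coincide, matching the description above.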

Inference Phase:

- Input Preparation:

  - Similar to training, the question and passage are passed as: [CLS] + question + [SEP] + passage + [SEP].

- Predicting Start and End Positions:

  - After processing the input, BERT provides a start position score and an end position score for each token in the passage.

  - The token with the highest start score is predicted as the start of the answer span, and the token with the highest end score is predicted as the end. Because the two choices are made separately, the end must additionally be constrained to fall at or after the start; the original BERT paper does this by choosing the pair of positions with the highest combined score.

- Extracting Answer:

  - Once the start and end positions are identified, the tokens between (and including) these positions form the predicted answer.
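Here is a small sketch of the extraction step. Picking the best start and the best end independently can produce an end that comes before the start, so this version scores (start, end) pairs jointly and only considers pairs where the end is not before the start. The function name `best_span`, the `max_len` cap, and all scores are illustrative assumptions.

```python
from typing import List, Tuple


def best_span(start_scores: List[float], end_scores: List[float],
              passage_start: int, passage_end: int, max_len: int = 30) -> Tuple[int, int]:
    """Pick (start, end) inside the passage maximizing start + end score, with start <= end."""
    best = (passage_start, passage_start)
    best_score = float("-inf")
    for s in range(passage_start, passage_end + 1):
        # Only ends at or after s, and at most max_len tokens long.
        for e in range(s, min(s + max_len, passage_end + 1)):
            score = start_scores[s] + end_scores[e]
            if score > best_score:
                best_score = score
                best = (s, e)
    return best


tokens = ["[CLS]", "capital", "of", "France", "?", "[SEP]",
          "The", "capital", "is", "Paris", ".", "[SEP]"]
# Hypothetical scores; the passage occupies indices 6..10.
start_scores = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5, 0.2, 0.1, 4.0, 0.0, 0.0]
end_scores = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1, 3.0, 0.2, 2.8, 0.3, 0.0]

# Independent argmax gives an invalid span here: the end lands before the start.
naive_start = max(range(6, 11), key=lambda i: start_scores[i])
naive_end = max(range(6, 11), key=lambda i: end_scores[i])

s, e = best_span(start_scores, end_scores, passage_start=6, passage_end=10)
answer = " ".join(tokens[s:e + 1])
print(naive_start, naive_end, (s, e), answer)
```

With these scores the independent choice would start at "Paris" but end at the earlier "capital", while the joint search returns the single-token span "Paris".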

Example for Inference:

- Question: "What is the capital of France?"

- Passage: "Paris is the capital of France. It is known for the Eiffel Tower."

After processing, if BERT predicts the start position as the token "Paris" and the end position as the same token "Paris", the predicted answer would be "Paris".

In the Question Answering task with BERT, the main idea is predicting the correct start and end positions of the answer within the passage. The training ensures that these predictions are as accurate as possible, and during inference, these predictions are used to extract the answer span.

BERT for Sentence Pair Regression:

Sentence Pair Regression involves predicting a continuous value based on the relationship between two sentences. This is commonly used in tasks like Semantic Textual Similarity (STS), where the objective is to measure the similarity between two sentences.

Training Phase:

- Input Preparation:

  - Two sentences are provided as input.

  - The input is formed as: [CLS] + sentence A + [SEP] + sentence B + [SEP].

  - A ground truth similarity score, typically ranging between 0 and 1 (or 0 and 5, depending on the dataset), is associated with this pair.

- BERT's Output:

  - After passing the input through BERT, the [CLS] token's representation is extracted.

  - This representation is then passed through a dense layer to produce a continuous value (the predicted similarity score).

- Loss Calculation:

  - The predicted similarity score is compared to the ground truth similarity score.

  - Mean Squared Error (MSE) or another regression loss is used to measure the difference between the predicted and true values.
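The regression head and its loss are small enough to write out by hand. In this sketch the 4-dimensional [CLS] vector, the weights, the bias, and the batch of scores are all made-up values chosen for easy arithmetic (a real BERT-base [CLS] vector has 768 dimensions, and the weights are learned).

```python
from typing import List


def regression_head(cls_vector: List[float], weights: List[float], bias: float) -> float:
    """Dense layer with a single output unit: w . h_cls + b."""
    return sum(w * h for w, h in zip(weights, cls_vector)) + bias


def mse(predictions: List[float], targets: List[float]) -> float:
    """Mean squared error over a batch of predictions."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)


# Toy [CLS] representation and head parameters (hypothetical values).
cls_vector = [0.5, -1.0, 2.0, 0.0]
weights = [1.0, 0.5, 1.5, -2.0]
bias = 0.25
predicted_score = regression_head(cls_vector, weights, bias)

# Hypothetical batch of two sentence pairs: predicted vs. ground truth scores.
batch_loss = mse([4.5, 2.0], [4.7, 3.0])

print(predicted_score, batch_loss)
```

Unlike the classification heads, there is no softmax here: the single output of the dense layer is used directly as the similarity score.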

Example for Training:

- Sentence A: "A boy is playing soccer."

- Sentence B: "A child is playing football."

- Ground Truth Similarity Score: 4.7 (out of 5)

The input to BERT would be: [CLS] A boy is playing soccer. [SEP] A child is playing football. [SEP]

BERT would process this input and produce a predicted similarity score, say 4.5. The loss would be calculated from the difference between this predicted value (4.5) and the ground truth value (4.7); with squared error, that is (4.5 - 4.7)^2 = 0.04.

Inference Phase:

- Input Preparation:

  - Similar to training, the two sentences are passed as: [CLS] + sentence A + [SEP] + sentence B + [SEP].

- Predicting Similarity Score:

  - The [CLS] token's representation is extracted and passed through the dense layer to get the predicted similarity score.

Example for Inference:

- Sentence A: "A boy is playing soccer."

- Sentence B: "A child is playing a game."

Based on the relationship between the sentences and the trained model, BERT might predict a similarity score of, say, 3.8.

The goal in Sentence Pair Regression tasks like STS is to accurately predict the degree of similarity between two sentences, represented as a continuous value.
